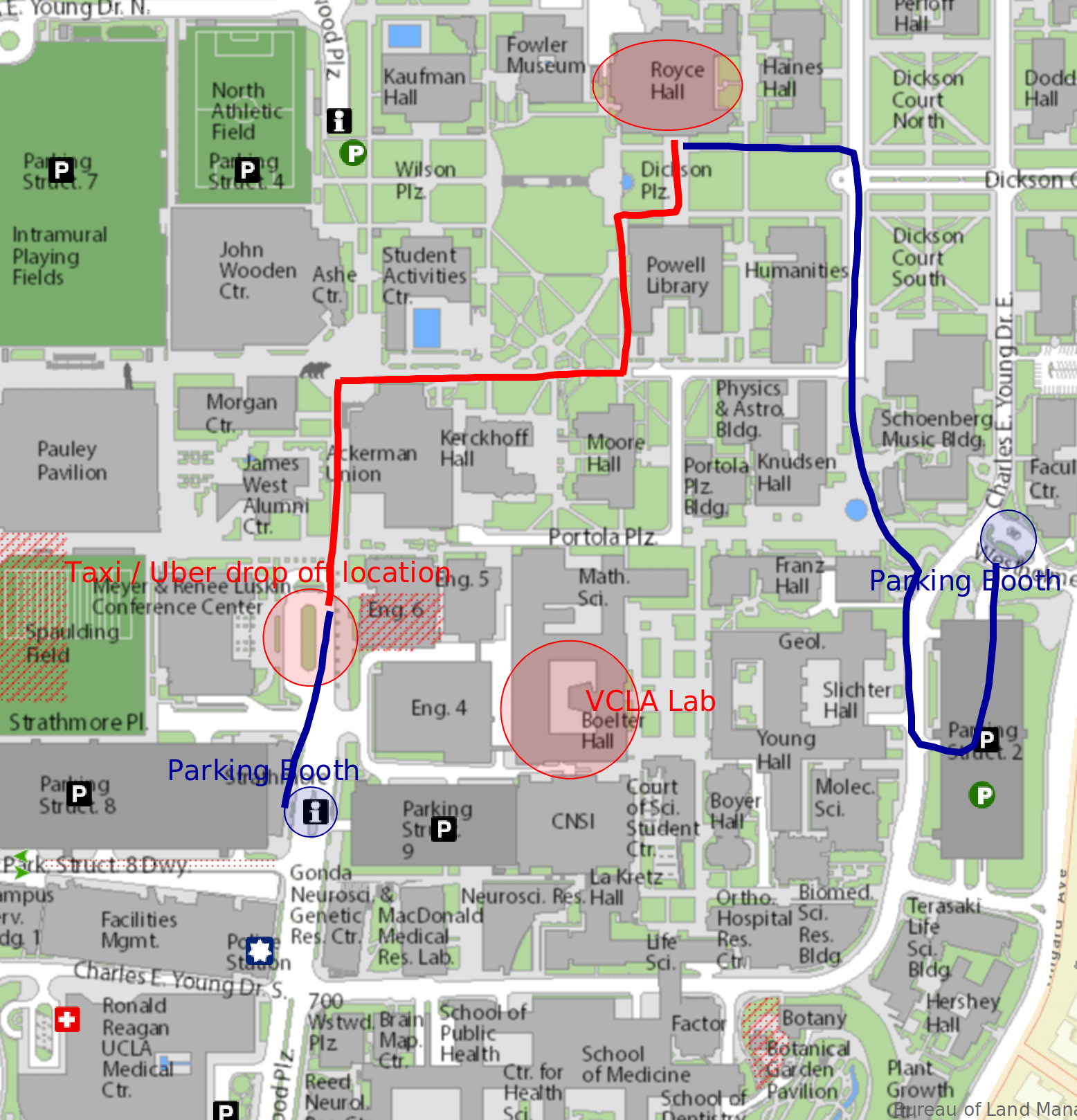

Team

Principal Investigator

Song-Chun Zhu

Professor of Statistics and Computer Science

UCLA

Director of the UCLA Center for Vision, Cognition, Learning and Autonomy

Research Interest:

Computer Vision, Statistical Learning, Cognition, AI, Robot Autonomy

Members in the US

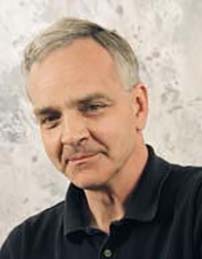

Martial Hebert

Professor of Robotics

Carnegie Mellon University

Director of the CMU Robotics Institute

Research Interest:

Computer Vision, Robotics and AI

Abhinav Gupta

Assistant Professor

Carnegie Mellon University

Research Interest:

Computer Vision and Lifelong Learning

Nancy Kanwisher

Walter A. Rosenblith Professor of Cognitive Neuroscience

Department of Brain and Cognitive Sciences

MIT

Research Interest:

Cognitive Neuroscience

Josh Tenenbaum

Professor of Computational Cognitive Science

Department of Brain and Cognitive Sciences

MIT

Research Interest:

Cognitive modeling of perception, learning, and reasoning in humans and machines

Fei-Fei Li

Associate Professor of Computer Science

Stanford University

Director of the Stanford Artificial Intelligence Lab

Research Interest:

Computer Vision, Neurosciences, and Artificial Intelligence

David Forsyth

Professor of Computer Science

University of Illinois at Urbana-Champaign

Research Interest:

Machine Learning, Graphics

Derek Hoiem

Associate Professor of Computer Science

University of Illinois at Urbana-Champaign

Research Interest:

General Visual Scene Understanding

Brian Scholl

Professor of Psychology and Cognitive Science

Yale University

Chair of the Cognitive Science Program

Director of the Yale Perception & Cognition Laboratory

Research Interest:

Perception of Causality, Animacy, and Intentionality

Members in the UK

Philip Torr

Professor of Engineering

University of Oxford

Director of Torr Vision Group

Research Interest:

Computer Vision and Machine Learning

Philippe Schyns

Professor of Visual Cognition

University of Glasgow

Director of the Institute of Neuroscience and Psychology

Research Interest:

Rapid Scene Perception, Task-Dependent Categorization

Andrew Glennerster

Professor of Visual Neuroscience

University of Reading

Research Interest:

3D Scene Perception in High-Fidelity Immersive Reality

Ales Leonardis

Chair Professor of Robotics

University of Birmingham

Co-Director of Computational Neuroscience and Cognitive Robotics Centre

Research Interest:

Computer Vision, Compositional Modeling, and Cognitive Robotics